Hello. I am a scientist & engineer at Google DeepMind.

I am on the Gemini core team working on RL and reasoning.

Recently, I was model co-lead & captain of the Gemini Deep Think model that achieved the IMO Gold Medal 🥇.

Previously, I co-led early LLM efforts at Google Brain including PaLM-2, UL2 and Flan-2. While I was a researcher for most of my professional life, I also took a short break to be a co-founder of a "unicorn" AI startup in 2023-2024.

I am interested in getting humanity to AGI and my main research focus is to find game changers to get us there.

As a side quest, I am also building out Google DeepMind Singapore together with Quoc Le, where I will found and lead a new GenAI (Gemini core) team.

2025 introspections: my year back at Google

Reflecting on 2025 and my one year back on Google DeepMind’s Gemini team. From returning to research to winning the historic IMO gold medal, along with starting GDM Singapore, it has been a wild year.

Returning to Google DeepMind

Returning to Google and recounting my experiences as a startup co-founder.

What happened to BERT & T5? On Transformer Encoders, PrefixLM and Denoising Objectives

A blog post series about model architectures, Part 1: What happened to BERT and T5? Thoughts on Transformer encoders, PrefixLM and denoising objectives.

Training great LLMs entirely from ground up in the wilderness as a startup

Chronicles of training strong LLMs from scratch in the wild

2022 in Review: Top language AI research papers + interesting papers to read

Here are some of the best language AI / NLP papers of 2022!

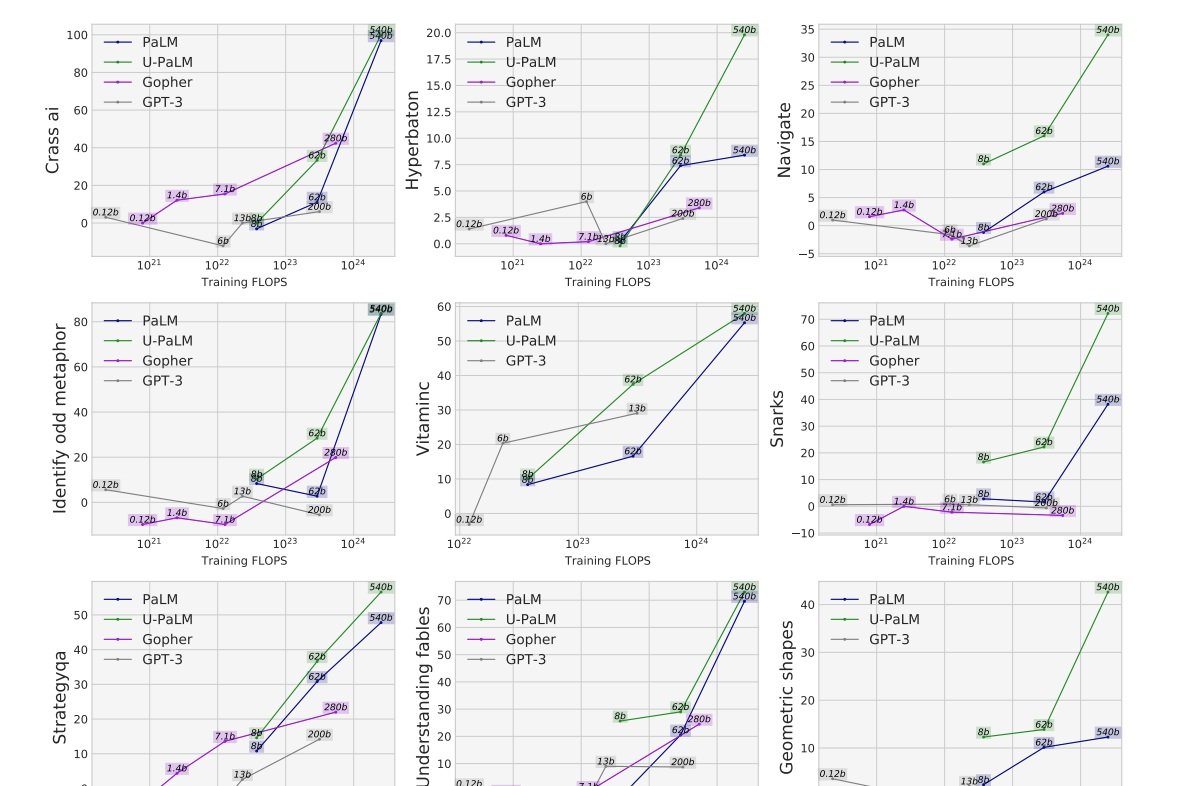

On Emergence, Scaling and Inductive Bias

Some thoughts on emergent abilities and scaling language models.